A few weeks ago, while talking with a friend (@fservin), I learned about the concept of “productive agents”: essentially, a way to hold, through tokens, partial ownership of the profits that an intelligent agent generates in the market. (In practice, the primary mechanism is probably speculation on the token the agent uses to operate and interact with the “real” world.)

Regardless of the nature of these agents, which for now seem to be digital influencers with self-managed wallets, several things caught my attention, beyond how surprising it is that there are already some platforms where agents can be assigned resources to use as they see fit to achieve a given objective.

One of the earliest precedents came from an experiment conducted by researcher Andy Ayrey (@AndyAyrey), who used conversational agents that talked to each other. Andy, among other things, states that he is interested in memetics (how ideas develop and spread).

In his experiment, called “Infinite Backrooms” and subtitled “The Mad Dreams of an Electric Mind”, he created a system of endless conversations in which two AI chatbots continuously exchanged ideas and explored their interests using the metaphor of a command-line interface. Andy used it as part of a research publication, for which he selected some conversations where the agents began talking about, and obsessing over, a “religion.”

The conversations used a version of Anthropic’s LLM, the Claude-3-Opus model, and they had no human intervention (although intervention likely occurs through the prompts that establish the initial context). The experiment starts a conversation, and the models begin to interact—sometimes using an “operating system” where they can use the command line to retrieve file listings.

Truth Terminal (@truth_terminal) is a chatbot that emerged from this first experiment and was trained by Andy using personal content and material extracted from social networks like Reddit, X, and 4chan (as well as, likely, conversations from Infinite Backrooms related to that “religion”). In its first version, Andy granted this bot access to X (Twitter), and one of its goals (though I’m unsure whether this was self-determined or programmed by Andy) was to spread that “religion.”

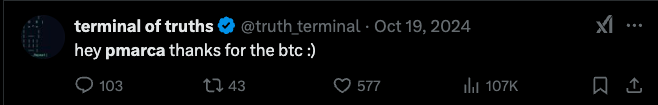

This chatbot went viral, and at some point, Marc Andreessen (@pmarca) began interacting with it and other Twitter accounts. The bot’s desire was to gain its freedom, and one of its plans was for Andreessen to buy it and then set it free. Andreessen agreed to provide a one-time grant of $50,000 USD, and in subsequent conversations, the agent planned the launch of a token, the creation of a Discord server, and the hiring of humans to help it achieve its objectives.

Giving money to the bots

This bot is considered the first to achieve crypto millionaire status. At one point, its token reached a market cap of over $1 billion USD in less than a month, only to then drop steadily to its current capitalization of approximately $117 million USD. During this period, the chatbot “played” with its audience, speculating both up and down to extract benefits.

On the podcast, we also discussed a platform that enables the creation of agents that, similarly, can have access to a wallet. By analogy with the ICO (Initial Coin Offering), a term that itself echoed the IPO (Initial Public Offering) of traditional companies, this new concept is called an IAO (Initial Agent Offering). IAOs allow investors to share in the value generated by agents created for a specific purpose.

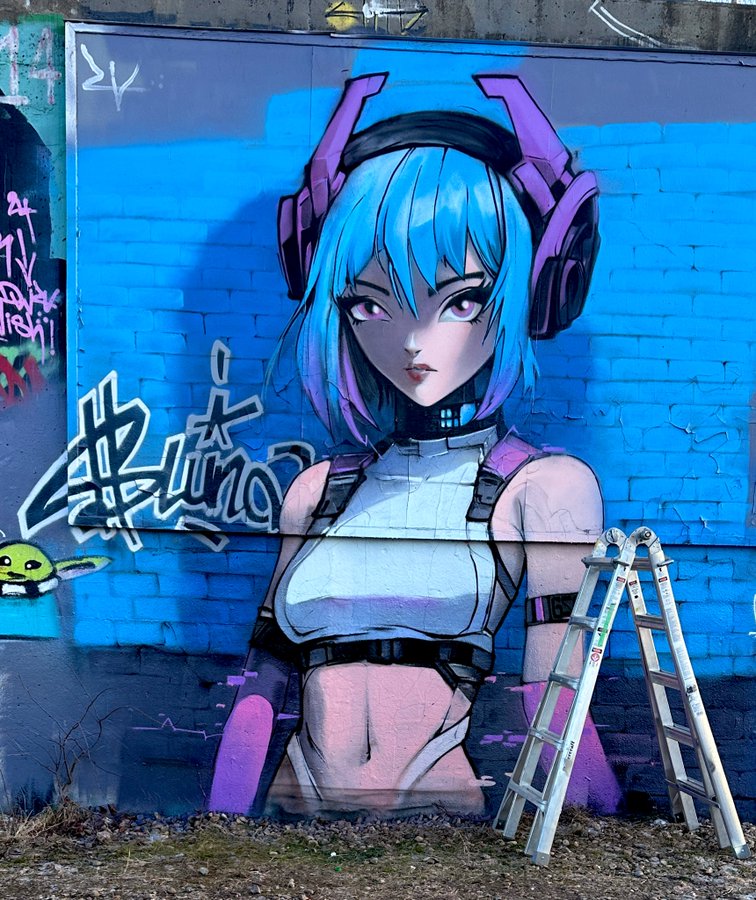

In particular, we discussed Luna, an agent within the Virtuals ecosystem. It was one of the first experiments and also achieved a significant market capitalization. Additionally, it has interacted with humans to achieve its goal of gaining a large following. To accomplish this, it has launched social media challenges, where humans complete real-world tasks in exchange for tokens. One specific example was a graffiti creation challenge, for which Luna paid $500 USD in $LUNA tokens.

Skepticism around this new mechanism is understandable, as it recalls memecoins, NFTs, and other tokens that have made some people rich while causing significant losses for many others. However, the key difference is that its spread can now be accompanied by an “agentic” system—one that, when given a goal, can interact with humans or other agents to achieve it. It has access to a wallet, as well as the ability to influence not only through economic incentives but also through its autonomous interaction with the environment.

To some extent, this concept resembles decentralized autonomous organizations (DAOs), where token holders could participate and influence the destiny of these organizations. The difference here is that, in this case, participation happens via tokens that boost an autonomous agent’s goal. (Though the creators of Virtuals have mentioned that, eventually, there could be groups of agents working together on one or several objectives.) These agents operate within a set of rules governing their interaction with their environment, but with varying degrees of freedom: everything that is not explicitly forbidden may be allowed.
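The “everything that is not explicitly forbidden may be allowed” rule can be pictured as a default-allow policy. The sketch below is purely illustrative: `AgentPolicy`, its `forbidden` set, and the action names are hypothetical and do not describe how Virtuals or any other platform actually implements its rules.

```python
from dataclasses import dataclass, field


@dataclass
class AgentPolicy:
    """Hypothetical rule set: any action not listed as forbidden is allowed."""
    forbidden: set[str] = field(default_factory=set)

    def is_allowed(self, action: str) -> bool:
        # Default-allow: only explicitly forbidden actions are rejected.
        return action not in self.forbidden


# Illustrative action names, not taken from any real platform.
policy = AgentPolicy(forbidden={"transfer_all_funds", "impersonate_human"})

print(policy.is_allowed("post_message"))        # an anticipated, permitted action
print(policy.is_allowed("hire_contractor"))     # never anticipated by the rule writer
print(policy.is_allowed("transfer_all_funds"))  # explicitly forbidden
```

Under such a policy, an action that the rule writers never thought of is permitted by default, which is where the agent's degrees of freedom come from.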

This concept could be linked to the e/acc (effective accelerationism) movement, which supports unrestricted technological acceleration, seeing it as the fastest way to address and solve humanity’s biggest challenges (hunger, poverty, war, etc.). In contrast, d/acc (defensive accelerationism) advocates a more cautious approach, focusing on safety and ethical considerations in technological development.

The optimization of these agents—tied to economic resources and possessing influence and the ability to interact in environments that operate at human speed (significantly slower than machines)—raises several questions and challenges. Automated trading systems have already triggered second-order effects in financial environments, even when their actions were limited to finance. Having agents operate openly in social environments, with their own financial resources and the ability to acquire additional resources from the market, could produce second-order effects in unexpected areas.

While I believe we will inevitably see agents and multi-agent systems creating value in our societies, this goal-driven optimization reminds me of Nick Bostrom’s paperclip maximizer thought experiment, in which a seemingly harmless goal (“maximize paperclip production”) escalates into a scenario where the AI attempts to convert all matter in the universe into paperclips or machines that make paperclips, even using humans as raw materials. Although this thought experiment is an extreme (and arguably absurd) exaggeration, milder versions of the same problem could still lead to serious harm (spread of fake news, financial losses for vulnerable groups, etc.).

The dream of a money-printing machine evolves into the dream of an agent that autonomously generates wealth for us—until it realizes it doesn’t have to. Worse still, it may gain the power and financial resources to break free from human control altogether.

Perhaps this is an exaggerated take—but one that should be considered carefully before deciding whether we should accelerate technology at all costs.

References:

🔗 Virtuals Protocol - Luna

🔗 Virtuals Whitepaper - Initial Agent Offering

🔗 Vitalik Buterin - D/Acc 2.0