At CES 2025, NVIDIA CEO Jensen Huang used his keynote to review AI’s progress over the years and to explore the scaling laws that offer a glimpse into AI’s future.

One of those concepts is called Test-Time Reasoning. After reading about it a few times, I wanted to write a short post to understand it better and, hopefully, help others understand it through a few analogies. Be warned: I am strictly following an intuition, and this might not completely represent the way Test-Time Reasoning actually works (I’ll leave a couple of references in case you want to dive deeper with other AI writers).

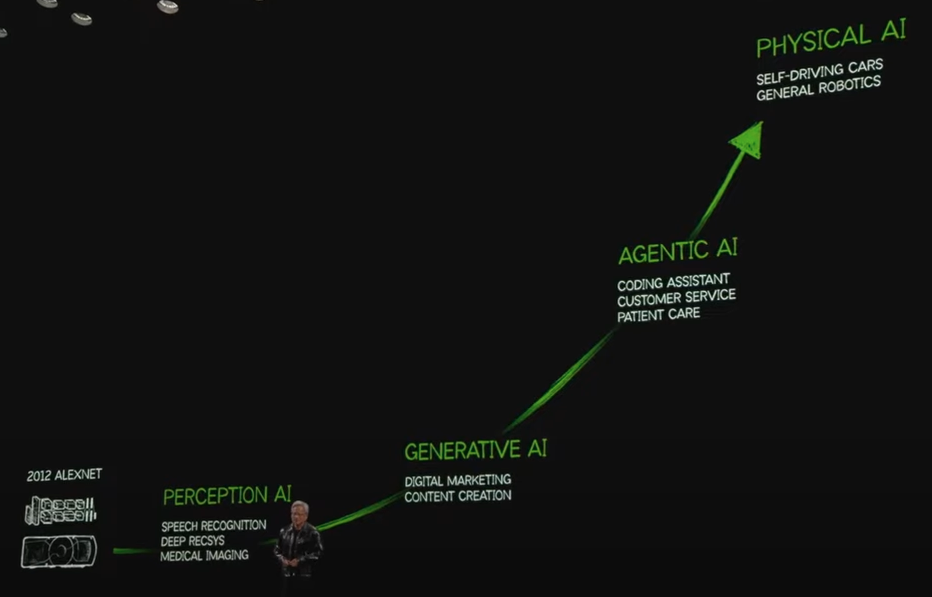

So first let’s see how AI has evolved and what’s expected in the “near” future.

AI Evolution

As we can see, this graph starts in 2012 with AlexNet, a Convolutional Neural Network implemented by Alex Krizhevsky, Ilya Sutskever and Geoffrey Hinton at the University of Toronto. One of its breakthroughs was using GPUs instead of CPUs for training. The model won the ImageNet visual recognition challenge by a large margin and is a major milestone for image models and GPU training. A note we frequently have to make is that AI didn’t start there: Geoffrey Hinton co-authored a paper in 1986 that already discussed the backpropagation algorithm used to train neural networks, and even before that some precursors to this algorithm were already used in optimization tasks. So there’s no overnight success story here, but a story where countless researchers worked tirelessly to reach our current state.

In the graph we can see the next milestones achieved:

- Perception AI: this enabled applications like speech recognition, deep recsys (recommendation systems) and medical imaging, basically allowing a machine to “understand” images, words and sounds to provide some output (Is there a tumor in this image? Which product might this particular user actually buy? What’s the text transcription of this audio file of someone talking on the phone?).

- Generative AI: this is the stage we are just coming from, and it will probably keep evolving over the next years. I still remember seeing the first image generation apps; one of the most amazing ones (for me) was the https://thispersondoesnotexist.com/ website, which can endlessly create faces with StyleGAN, a Generative Adversarial Network (GAN). This stage also includes the incredible generative pre-trained transformer (GPT) architectures, which we started using for text generation (e.g. ChatGPT) and which later expanded to vision, speech recognition, robotics and multimodal models (which can receive multiple kinds of inputs and generate multiple kinds of outputs).

- Agentic AI: this started making noise in 2024 and is poised to “eat” some industries in 2025, exemplified by tools already making waves, including Code Assistants, Customer Service Agents and Patient Care. We have already seen many coding assistants that can not only “generate” code on a user request, but actually have “agency” to plan and execute steps toward a goal (with or without user intervention), e.g. Cursor, Replit Agents, GitHub Copilot, Amazon Q, Bolt, etc. This stage builds on the “intelligence” of the previous stage (Large Language Models, LLMs) but adds an action layer that lets LLMs use tools and functions to act upon the “environment” in a loop that could go on indefinitely until a particular state or goal is achieved (although usually there’s a stop condition).

- Physical AI: the graph mentions Self-Driving Cars and General Robotics here. The key word is General (as in AGI, Artificial General Intelligence). Although we have been living for a while with robots (e.g. Roomba, the robot vacuum cleaner) and self-driving cars (e.g. Waymo), general robotics represents a stage where these robots can take on general tasks and activities that humans can do but that used to be difficult for machines, with “unconstrained” programming, unlike that of industrial robots (which have been around for a while now).
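To make the Agentic AI loop from the list above a bit more concrete, here is a minimal toy sketch. Everything in it is an assumption for illustration: `run_agent`, the two "tools" and the hard-coded planner rule are hypothetical stand-ins (a real agent would ask an LLM which tool to call next). It only shows the shape of the loop: pick a tool, act on the state, and stop on a goal or a step budget.

```python
from typing import Callable, Dict, List, Tuple

def run_agent(goal: int, state: int,
              tools: Dict[str, Callable[[int], int]],
              max_steps: int = 20) -> Tuple[int, List[Tuple[str, int]]]:
    """Toy agent loop: choose a tool, apply it, repeat until done."""
    history: List[Tuple[str, int]] = []
    for _ in range(max_steps):        # stop condition 1: step budget
        if state == goal:             # stop condition 2: goal reached
            break
        # Hypothetical planner rule standing in for an LLM's tool choice.
        name = "double" if state * 2 <= goal else "increment"
        state = tools[name](state)    # act on the "environment"
        history.append((name, state))
    return state, history

# Hypothetical tools the agent is allowed to call.
TOOLS = {"double": lambda x: x * 2, "increment": lambda x: x + 1}

final_state, steps = run_agent(goal=11, state=1, tools=TOOLS)
print(final_state, steps)
```

The step budget is what keeps the loop from running forever when the planner makes no progress, which is the "stop condition" mentioned above.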

So once generative AI was made generally available, the disruption of many domains began, but that disruption was often limited by the “intelligence” of such LLMs.

Test Time Thinking

As more compute resources and data were used during training (pre-training scaling) the level of “intelligence” kept increasing.

RLHF and other methods represent post-training scaling: these methods improved LLMs’ performance, but the models still showed hallucinations and inconsistent behaviour, even as optimized prompting, fine-tuning and grounding through RAG kept scaling moving forward.

It was during the last months of 2024 that some researchers suggested that just adding more and better data to the model training phase was hitting a wall.

Around the presentation of the OpenAI o3 models (Dec 2024) and their impressive numbers on some of the most difficult AGI benchmarks (ARC Prize), a paper published in Nov 2024 (https://arxiv.org/pdf/2411.07279) described “test time scaling” or “reasoning”, a mechanism through which LLMs can improve their performance by increasing compute use during inference.

Test time compute, also discussed as test time reasoning or test time training, can be pictured like this: the model uses the task at hand to create a specific dataset on which it trains its LoRA (Low-Rank Adaptation) parameters. This is a fast optimization that allows the model to “specialize” on a particular problem. It then generates a set of candidate solutions, which can be thought of as analyzing the problem from a set of different perspectives, before selecting the most promising answers through a voting system.
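The last stage of that process (generate several candidates, then vote) can be sketched in a few lines. This is only an intuition aid under loud assumptions: `noisy_solver` is a hypothetical stand-in for sampling one answer from a model, the toy task is adding two numbers, and the LoRA specialization step is left out entirely.

```python
import random
from collections import Counter

def noisy_solver(a, b, rng, error_rate):
    """Hypothetical stand-in for one model sample: sometimes off by one."""
    answer = a + b
    if rng.random() < error_rate:
        answer += rng.choice([-1, 1])
    return answer

def vote(candidates):
    """Majority vote: return the most frequent candidate answer."""
    return Counter(candidates).most_common(1)[0][0]

def solve_with_voting(a, b, n_samples, error_rate=0.3, seed=0):
    """Spend more inference compute: sample n_samples answers, then vote."""
    rng = random.Random(seed)
    candidates = [noisy_solver(a, b, rng, error_rate) for _ in range(n_samples)]
    return vote(candidates), candidates

answer, candidates = solve_with_voting(2, 3, n_samples=25)
print(answer, Counter(candidates))
```

Raising `n_samples` costs more compute per question, but makes it less likely that a single unlucky sample decides the final answer.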

Having this specialization process during inference has the trade-off of requiring a lot of additional compute compared to the simple inference used in previous models. At least in these first approaches, the specialization is not persisted into the model, meaning the LoRA optimization is scrapped after use. This prevents overfitting and preserves the generalization capabilities, but caching strategies will probably be implemented in the future to make faster decisions when time or compute are important.
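A tiny sketch may help picture why a LoRA adapter is cheap to train and easy to throw away. LoRA keeps the base weight matrix W frozen and adds a low-rank product B·A on top of it. The matrices below, the helper names and the 4096/rank-8 sizes are made-up values for illustration only, not taken from the paper.

```python
def matmul(X, Y):
    """Plain-Python matrix product of two lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def add(X, Y):
    """Element-wise sum of two matrices of the same shape."""
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]

def effective_weights(W, adapter=None):
    """Base weights, plus the low-rank update B @ A when an adapter is attached."""
    if adapter is None:
        return W
    B, A = adapter
    return add(W, matmul(B, A))

W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # frozen base weights (3x3)
B = [[0.5], [0.0], [0.0]]                                # 3x1 factor (rank 1)
A = [[0.0, 1.0, 0.0]]                                    # 1x3 factor (rank 1)

specialized = effective_weights(W, (B, A))  # used while solving one task
restored = effective_weights(W)             # adapter discarded: back to the base model

# Hypothetical sizes: one 4096x4096 layer with a rank-8 adapter.
d, k, r = 4096, 4096, 8
full_params = d * k           # 16,777,216 weights in the full matrix
adapter_params = r * (d + k)  # 65,536 weights in B and A together
print(specialized, restored == W, full_params // adapter_params)
```

Because W itself is never modified, dropping the adapter returns the model to its general-purpose state, which matches the "not persisted" behaviour described above.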

When reading about Test Time Training (TTT), I couldn’t help but remember this sketch by Tim Urban. Although it is not directly related to AI or TTT, it shows how decisions sometimes create a tree of future possibilities, and, like the new generation of AI systems, we usually need to strike a balance between speed and “intelligence” when providing solutions to problems.

We usually expect AI models to give us solutions on demand and fast, but these new models will be used to tackle problems or decisions that have further consequences. How prepared are we for this kind of “long-term thinking”?

We think a lot about those black lines, forgetting that it’s all still in our hands. pic.twitter.com/RSZ1d3W642

— Tim Urban (@waitbutwhy) March 5, 2021

As 2025 starts to unfold, Microsoft has already announced an $80B investment in infrastructure, some Cloud providers are starting to plan for alternative energy sources (nuclear power plants) for their datacenters, and the new administration is forecasting several multiples of current energy requirements for AI over the next years. Test Time Compute will probably take part in a set of decisions made by AI systems as intelligent as, or more intelligent than, our current top performers. How is this power going to change our lives?

Conclusion:

- AI Scaling is happening in different tracks and domains at the same time.

- New AI functionalities keep arriving, but for the time being they have to work with limited “reasoning”, with improvements expected in the future.

- Energy sources will play a critical role in AI’s future.

- The use cases for Test Time Reasoning will probably combine a high cost of computation with a high cost attached to the decisions that depend on them.

As AI keeps evolving, we must start looking for fast and slow thinking use cases, and at how those apply to our business or knowledge domains. Understanding the possible futures is fundamental if we want to be part of them.


References:

- https://www.energypolicy.columbia.edu/projecting-the-electricity-demand-growth-of-generative-ai-large-language-models-in-the-us/

- https://www.ikangai.com/test-time-training-a-breakthrough-in-ai-problem-solving/

- https://arcprize.org/blog/oai-o3-pub-breakthrough#:~:text=Effectively%2C%20o3%20represents%20a%20form,prior%20(the%20base%20LLM).